Since 2021, I’ve been a research scientist at Waabi, where I work on simulation. My research focuses on developing scalable techniques for simulation, and on bringing generative AI and neural rendering into the physical world through mixed reality.

I am currently building a team to tackle mixed reality and fast, large-scale neural rendering. If you have a strong background in graphics, game development, computer vision, or neural rendering, please e-mail me your resume! Waabi is growing; please see here for a complete list of openings.

Before Waabi, I spent three wonderful years as a researcher at Uber Advanced Technologies Group (ATG) Toronto, applying my research to the challenges of real-world autonomous driving, from state estimation to perception and prediction.

In October 2024, I completed my PhD in the Machine Learning Group at the University of Toronto under the supervision of Professor Raquel Urtasun. My PhD research focused on computer vision and deep learning for robotics and long-term autonomy.

Education

- PhD in Computer Science from the University of Toronto (2017–2024). Thesis title: Learning Rich Representations for Robot State Estimation (mirror)

- MSc in Computer Science with Distinction from ETH Zürich (2015–2017). Thesis title: Simultaneous localization and mapping in dynamic scenes

- BSc in Applied Computer Science from Transilvania University, Brașov, Romania (2011–2014)

Publications

Note: *denotes equal contribution.

Web PDF BibTeX Poster

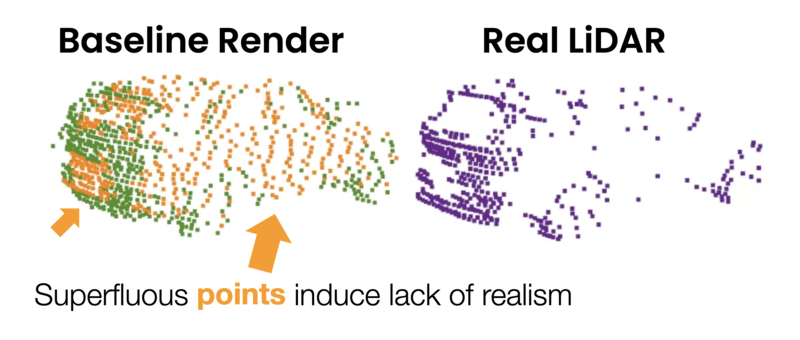

TL;DR: We propose a thorough methodology for evaluating how realistic a self-driving vehicle simulator is, and use it to quantify the importance of reflectance modeling and other factors when simulating LiDAR.

Web PDF BibTeX

TL;DR: Automatically build "game-ready" rigged 3D meshes from observed data by optimizing the vertices and material properties of a template mesh.

Web PDF (arXiv) BibTeX Poster Video (Download) Video (YouTube)

TL;DR: We show that object detection and prediction systems for self-driving cars can tolerate relatively large sensor-to-map misalignments (up to 0.5 m) without a large increase in errors. Motion planning, however, is much more sensitive. We propose a lightweight multi-task approach, with only 2 ms of overhead, that corrects the pose and increases resilience to localization errors.

PDF (arXiv) BibTeX Code

TL;DR: A simple yet effective low-overhead method for compressing neural network weights using a form of product quantization.

By permuting (Step 1) the rows of the weight matrices in an optimal way, we can maximize the effectiveness of quantization (Step 2). The key ingredient is fine-tuning (Step 3) the dictionary after quantization while keeping the weight codes fixed, which can be done with vanilla autodiff, e.g., in PyTorch.

We show that this approach retains the vast majority of the original networks' performance on classification and object detection while reducing the memory footprint of their parameters by nearly 20×. The code is open source.
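The quantization step (Step 2) above can be illustrated with a minimal, pure-Python sketch of product quantization. This is not the released code: the function names (`kmeans`, `product_quantize`, `reconstruct`, `compressed_bits`) are hypothetical, the permutation (Step 1) and fine-tuning (Step 3) are omitted, and a single shared codebook learned with plain k-means is assumed.

```python
import math
import random


def kmeans(vectors, k, iters=20, seed=0):
    """Plain Lloyd's k-means. Returns (centroids, assignments)."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    assign = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: nearest centroid under squared Euclidean distance.
        for i, v in enumerate(vectors):
            assign[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(v, centroids[c])),
            )
        # Update step: move each centroid to the mean of its assigned subvectors.
        for c in range(k):
            members = [vectors[i] for i in range(len(vectors)) if assign[i] == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assign


def product_quantize(weights, d, k):
    """Split each row into length-d subvectors and quantize them with one k-entry codebook."""
    cols = len(weights[0])
    assert cols % d == 0, "row length must be divisible by the subvector size"
    subvectors = [row[j:j + d] for row in weights for j in range(0, cols, d)]
    return kmeans(subvectors, k)


def reconstruct(codebook, codes, cols, d):
    """Decode by concatenating the codewords of each row's subvectors."""
    per_row = cols // d
    return [
        [x for code in codes[r:r + per_row] for x in codebook[code]]
        for r in range(0, len(codes), per_row)
    ]


def compressed_bits(num_codes, k, d, float_bits=32):
    """Storage: ceil(log2 k) bits per code plus a float codebook of k * d entries."""
    return num_codes * math.ceil(math.log2(k)) + k * d * float_bits


# Toy 4x4 weight matrix whose subvectors take only two distinct values.
weights = [[1.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 1.0],
           [1.0, 1.0, 1.0, 1.0],
           [0.0, 0.0, 0.0, 0.0]]
codebook, codes = product_quantize(weights, d=2, k=2)
approx = reconstruct(codebook, codes, cols=4, d=2)
```

On real layers the codebook cost is amortized over many more codes, which is where large savings come from. Following the TL;DR, Step 3 would then update `codebook` by gradient descent on the task loss while leaving `codes` untouched.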

Web PDF (arXiv) BibTeX Talk Video (YouTube) Results Video

TL;DR: We analyze Simultaneous Localization and Mapping (SLAM) in a setting where multiple cameras are attached to a robot but fire at different times, e.g., because they are triggered to follow the sweep of a spinning LiDAR. We extend a classic SLAM formulation with a continuous-time motion model that integrates these asynchronous observations robustly and efficiently. Our system reliably initializes and tracks its pose in crowded environments and closes loops using information from all cameras.

We evaluate our method on a new large-scale multi-camera SLAM benchmark and demonstrate the benefits of asynchronous sensor modeling at scale.
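To make the idea of querying a continuous-time trajectory at asynchronous camera timestamps concrete, here is a minimal sketch. It is a deliberate simplification, not the paper's motion model: poses are assumed planar (x, y, yaw), motion between two timestamped keyposes is assumed constant-velocity, and the names `Pose2D`, `interpolate_pose`, and the camera timestamps are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # heading in radians


def wrap_angle(a):
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def interpolate_pose(t, t0, p0, t1, p1):
    """Pose at time t, assuming constant velocity between keyposes (t0, p0) and (t1, p1)."""
    alpha = (t - t0) / (t1 - t0)
    dyaw = wrap_angle(p1.yaw - p0.yaw)  # rotate the short way around
    return Pose2D(
        x=p0.x + alpha * (p1.x - p0.x),
        y=p0.y + alpha * (p1.y - p0.y),
        yaw=wrap_angle(p0.yaw + alpha * dyaw),
    )


# Hypothetical rig: cameras triggered at different times as a LiDAR sweeps past them.
start, end = Pose2D(0.0, 0.0, 0.0), Pose2D(1.0, 0.0, math.pi / 2)
camera_times = {"front": 0.025, "right": 0.05, "rear": 0.075}
camera_poses = {
    name: interpolate_pose(t, 0.0, start, 0.1, end) for name, t in camera_times.items()
}
```

A full 3D system would instead interpolate SE(3) poses (for example, using spherical linear interpolation for the rotation) and compose each result with the corresponding camera's extrinsic calibration.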

International Conference on Intelligent Robots and Systems (IROS) 2020

Best Application Paper Finalist!

Web PDF (arXiv) BibTeX Play with it! Overview Video (90s) IROS Talk (15min) Code

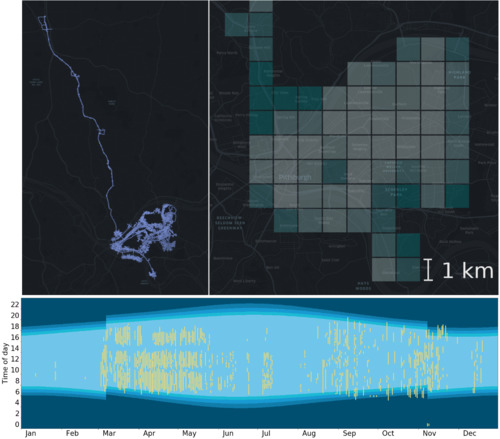

TL;DR: A new self-driving dataset containing >30M HD images and LiDAR sweeps covering Pittsburgh over one year, all with centimeter-level pose accuracy. We investigate the potential of retrieval-based localization in this setting and show that simple architectures (e.g., ResNet + global pooling) perform surprisingly well, outperforming more complex architectures like NetVLAD.

The figure shows the geographic (top) and temporal (bottom, x = date, y = time of day) extent of the data.
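A minimal sketch of the retrieval-based localization setup described above, under stated assumptions: a CNN (not shown) has already produced a feature map for each image, global average pooling turns it into a descriptor, and the query's pose is taken from the most similar database image by cosine similarity. The function names, toy features, and poses are hypothetical.

```python
import math


def global_average_pool(feature_map):
    """Average a list of per-location C-dim feature vectors into one C-dim descriptor."""
    n = len(feature_map)
    return [sum(channel) / n for channel in zip(*feature_map)]


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def build_database(entries):
    """entries: (feature_map, pose) pairs for mapped images with known poses."""
    return [(l2_normalize(global_average_pool(fmap)), pose) for fmap, pose in entries]


def localize(query_feature_map, database):
    """Return the pose of the database image with the highest cosine similarity."""
    q = l2_normalize(global_average_pool(query_feature_map))
    _, best_pose = max(database, key=lambda entry: sum(a * b for a, b in zip(q, entry[0])))
    return best_pose


# Toy example with 2-channel features; poses are placeholder (x, y) map coordinates.
database = build_database([
    ([[1.0, 0.0], [0.8, 0.2]], (10.0, 5.0)),
    ([[0.0, 1.0], [0.1, 0.9]], (42.0, -3.0)),
])
estimate = localize([[0.9, 0.1], [0.7, 0.3]], database)
```

With a database of millions of descriptors, the exhaustive `max` would typically be replaced by an approximate nearest-neighbor index.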

International Conference on Intelligent Robots and Systems (IROS) 2019

PDF (arXiv) BibTeX Talk Slides (PDF) Talk Slides (Apple Keynote) Video

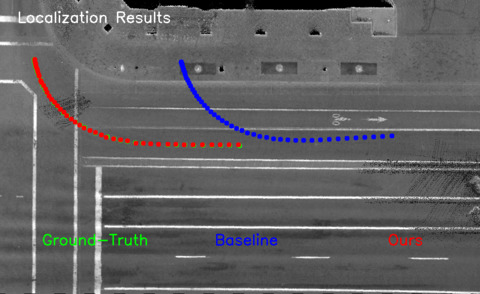

TL;DR: We localize autonomous vehicles using very sparse maps consisting of lane graphs (i.e., polylines) and stored traffic sign positions. These maps take up ~0.5 MiB/km², compared to, e.g., >100 MiB/km² for LiDAR ground intensity images. We use these maps in a histogram filter localizer and show a median lateral accuracy of 0.05 m and a median longitudinal accuracy of 1.12 m on a highway dataset.
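For readers unfamiliar with histogram filters, here is a minimal 1D sketch of the predict/update cycle. It is a generic textbook filter, not the paper's localizer: the grid size, motion kernel, and observation likelihood below are hypothetical, whereas in a map-based localizer the likelihood would come from comparing sensor observations against the map.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]


def predict(belief, shift, kernel):
    """Motion step: move the belief by `shift` cells and blur it with an odd-length
    `kernel` centered on the shifted cell to model motion noise. Probability mass
    that leaves the grid is dropped before renormalizing."""
    n, half = len(belief), len(kernel) // 2
    moved = [0.0] * n
    for i, b in enumerate(belief):
        for k, w in enumerate(kernel):
            j = i + shift + k - half
            if 0 <= j < n:
                moved[j] += b * w
    return normalize(moved)


def update(belief, likelihood):
    """Measurement step (Bayes' rule): multiply the prior by p(z | cell) and renormalize."""
    return normalize([b * l for b, l in zip(belief, likelihood)])


# Five position cells along one axis, starting from a uniform prior.
belief = [0.2] * 5
belief = update(belief, [0.1, 0.1, 0.6, 0.1, 0.1])  # observation favors cell 2
belief = predict(belief, shift=1, kernel=[0.25, 0.5, 0.25])  # vehicle moved ~1 cell
best_cell = max(range(len(belief)), key=belief.__getitem__)
```

A real localizer would run this over a 2D or 3D pose grid, but the alternation of motion blur and measurement reweighting stays the same.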

Conference on Computer Vision and Pattern Recognition (CVPR) 2019

PDF PDF (arXiv) BibTeX Poster Video

TL;DR: High-resolution maps can take up a lot of storage. We address this by using neural networks to learn a special-purpose compression scheme tailored to localization. We achieve a two-orders-of-magnitude improvement over traditional methods like WebP, as well as less than half the bitrate of a general-purpose learning-based compression scheme. For reference, PNG takes up 700× more storage on our dataset.

PDF BibTeX Poster Talk Slides (PDF) Video

TL;DR: Matching-based localization methods using LiDAR can provide centimeter-level accuracy, but require careful beam intensity calibration in order to perform well. In this paper, we cast the matching problem as a learning task and show that it is possible to learn to match online LiDAR observations to a known map without calibrated intensities.

IEEE International Conference on Robotics and Automation (ICRA) 2018

Web PDF BibTeX Poster Code

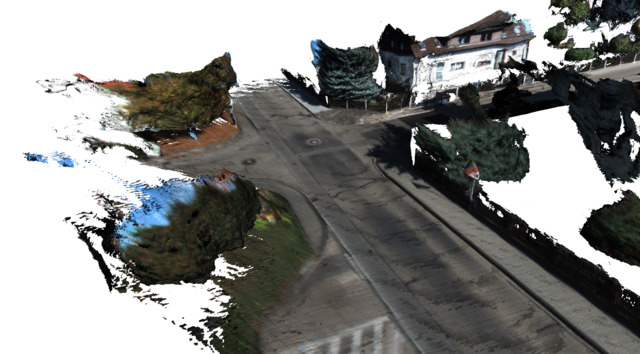

TL;DR: An online outdoor mapping system using a stereo camera that reconstructs the dynamic objects it encounters in addition to the static map. It supports map pruning, which eliminates stereo artifacts and reduces memory consumption to less than half.

Work Experience

Industry

- Full-time senior scientist at Waabi (Mar 2021–Present).

- Full-time research scientist at Uber ATG Toronto (Jan 2018–Feb 2021).

- Helped develop scalable and robust centimeter-accurate localization methods for self-driving cars.

- LiDAR-based map localization, visual localization, learning-based compression, large-scale machine learning (Apache Spark).

- Multi-task learning for autonomous driving, with a focus on real-time operation.

- Data engineering; petabyte-scale data ingestion, curation, and benchmark selection.

- Previously, I did a series of software engineering internships in the US during my undergrad:

- Internship: Twitter (Summer 2015, San Francisco, CA), Performance Ads

- Developed Apache Storm and Hadoop data pipelines using Scala.

- Internship: Google (Summer 2014, New York, NY), Data Protection

- Co-developed a system for performing security-oriented static analysis of shell scripts used to run large numbers of cluster jobs.

- Internship: Microsoft (Summer 2013, Redmond, WA), Server and Tools Business

- Security and reliability analysis of a web service that is part of the Azure portal.

Academic

- Peer review: IJCV 2021, CVPR (2021–present), ECCV/ICCV (2020–present), NeurIPS 2022, ICLR 2022, ICRA (2019, 2021–present), IROS (2019–present), CoRL 2020, AAAI 2021, RA-L (2020–present)

- Acknowledged as one of the top reviewers for ECCV 2020 (top 7.5%) and ECCV 2022.

- Acknowledged as an outstanding reviewer for CVPR 2021.

- Acknowledged as one of the top reviewers for NeurIPS 2022.

- Teaching Assistant: Image Analysis and Understanding (CSC420), University of Toronto, Fall 2017.

Talks

- Embodied AI for Autonomous Vehicles: From Open-Loop Benchmarks to Closed-Loop Simulation (Guest Lecture at NYU, 2025-04-10)

- Guest lecture at NYU’s Courant Institute, part of DS-GA.3001 Advanced Topics in Embodied Learning and Vision (Spring 2025, Prof. Mengye Ren).

- All About Self-Driving CVPR 2024 Tutorial (Speaker and Co-Organizer, 2024-06-18)

- I once again co-organized Waabi’s CVPR 2024 Tutorial on self-driving cars.

- I covered topics related to hardware (LiDAR, RTK, RADAR, cameras) as well as software (localization and mapping). Check out the tutorial website for more information!

- Since last year’s instance, many new and promising avenues of research have started gaining traction, and we have updated our tutorial accordingly. To name a few examples, these include occupancy forecasting, self-supervised learning, foundation models, the rise of Gaussian Splatting and diffusion models for simulation, and the study of closed-loop vs. open-loop evaluation.

- Guest Talk at the UTRA Hackathon at the University of Toronto (2024-01-20) (Hackathon homepage)

- All About Self-Driving CVPR 2023 Tutorial (Speaker and Co-Organizer, 2023-06-19)

- I co-organized Waabi’s CVPR 2023 Tutorial on self-driving cars. Our tutorial was featured in CVPR Daily magazine! [mirror]

- I covered topics related to hardware (LiDAR, RTK, RADAR, cameras) as well as software (localization and mapping). Check out the tutorial website for more information!

- On Simulation and Localization for Autonomous Robots (Guest Lecture at the University of Tartu, 2023-03-24)

- All About Self-Driving CVPR 2021 Tutorial (Speaker, 2021-06-20)

- I was a speaker at the CVPR 2021 Tutorial on self-driving cars organized by Waabi.

- I covered the same topics as last year, with some additional material on RTKs and localization.

- Scaling Up Precise Localization for Autonomous Robots (DevTalks Reimagined 2021)

- The talk video is available on YouTube

- DevTalks website: devtalks.ro/speakers

- Keynote Slides (83MB)

- PDF Slides (no videos, 3.6MB)

- All About Self-Driving CVPR 2020 Tutorial (Speaker, 2020-06-14)

- I was a speaker at the CVPR 2020 Tutorial on self-driving cars organized by our lab.

- I talked about hardware with Davi Frossard and about localization with Julieta Martinez and Shenlong Wang (including a crash course on Monte Carlo localization!). All the videos from the tutorial are available on its website or directly on YouTube in this playlist.

- [PDF Slides] Unsupervised Sequence Forecasting of 100,000 Points for Unsupervised Trajectory Forecasting (2020-04-10)

- Paper I presented: [PDF] Weng et al., 2020

- [PDF Slides] Deep Point Cloud Registration (2019-09-12)

- In this talk, I give a brief overview of recent advances in learning-based methods for robust point cloud registration, including L3-Net, DeepVCP, and Deep Closest Point. I cover the main ideas in these papers, as well as their strengths and weaknesses, and discuss some insights and possible avenues for future research.

- [PDF Slides] Shared Autonomy via Deep Reinforcement Learning (2019-02-22)

- Paper I presented: [PDF] Reddy et al., RSS 2018

- Seminar Presentation for CSC2621HS at UofT (Imitation Learning for Robotics)

- [PDF Slides] Geometry-Aware Learning Methods for Computer Vision (2019-01-18)

- This talk was the first part of my PhD qualifying oral examination. It’s a bit bare-bones since it was meant to support the examination itself (i.e., lots of discussion beyond the slides), but it may still be of interest.

Miscellaneous

- A short blog post with a few tips on making academic videos

- Yeti, an OpenGL 3D game engine with forward and deferred rendering support, real-time shadow mapping, and more.

Bio

Before starting my PhD, I completed my Master’s in Computer Science at ETH Zurich. For my Master’s Thesis, I developed DynSLAM, a dense mapping system capable of simultaneously reconstructing dynamic and potentially dynamic objects encountered in an environment, in addition to the background map, using just stereo input. More details can be found on the DynSLAM project page.

Previously, while doing my undergraduate studies at Transilvania University in Brașov, Romania, I interned at Microsoft (2013, Redmond, WA), Google (2014, New York, NY) and Twitter (2015, San Francisco, CA), working on projects related to privacy, data protection, and data pipeline engineering.

I am originally from Brașov, Romania, a lovely little town which I encourage everybody to visit, together with the rest of Southeast Europe.

Contact

Email me at andrei.ioan.barsan (at) gmail (dawt) com.

Find me on GitHub, Google Scholar, LinkedIn, or StackOverflow.